Refactorings are an integral part of our lives as developers. It would be nice to always start from scratch, implement new features and realize new ideas. Far more often, however, we have to adapt existing source code. Refactorings play a crucial role here, especially when legacy code is involved: adding a requirement or fixing a bug often requires a refactoring first. We are also frequently tasked with tidying up an untested code base, a job many developers shy away from.

An example from my early years as a developer went something like this (slightly embellished by AI):

One day, my boss came to me with a tricky mission: I was to carry out a refactoring that would allow a new feature to be integrated seamlessly. He gave me exactly one week and looked at me expectantly: "Can you do it?" I quickly skimmed the code, nodded and agreed. I created a refactoring branch, started my IDE and got to work.

At first, everything seemed to be going according to plan. Testing, however, wasn't exactly my favorite discipline at the time, so the code remained almost as untested as before. What started out as a well-structured endeavor soon turned into a minefield. Unexpected compiler errors exploded left and right, undetected behavioral changes crept in, and hidden dependencies popped up like ghosts out of nowhere. By the end of the first week, I had already missed the deadline. This triggered a wave of stress and nervousness. I was programming under high pressure with sweat pouring down my forehead, driven by the desperate goal of not wasting any more precious time. What was planned as a one-week project dragged on agonizingly for three weeks, and I was at the end of my tether when everything was finally merged into the trunk.

Does that sound familiar? Fortunately, refactoring doesn't have to be chaotic. Numerous books and methods have been developed to help us take an orderly approach. Ultimately, it's about creating a structure that allows refactoring to be done in a planned, predictable and deliberate way, without the bellyache. In this article, I explain how you can achieve this in four steps.

1. Analysis

Analysis is the first phase of refactoring, and you are certainly familiar with it: refactoring is not possible without analysis. In this phase, we take a close look at the code to determine the scope of the tasks ahead. The phase has three main objectives:

- The first objective is to familiarize ourselves with the code. Regardless of whether we already know the code base or not, we need to take time to understand its current structure.

- Once we have understood the code, we move on to the second objective: finding a suitable entry point. A complex refactoring must be justified, because it incurs costs without delivering any direct added value itself. There are two reasons for carrying out a refactoring: implementing a new feature or fixing a bug. In both cases, we locate the place in the code base where the implementation starts.

- Once the entry point has been determined, we move on to the third objective: defining the scope of the refactoring. Depending on the requirements, this scope can comprise a single method, a class or an entire module. It is our starting point for the next steps.

Nothing so far should be new, because without analysis we cannot even carry out an unstructured refactoring. We can't start the implementation until we know where we want to start and what we want to clean up.

2. Put under test

Why is it important to put code under test before refactoring it? Consider how Michael Feathers defines legacy code: simply as code without tests. A simple statement, and at the same time the biggest challenge when we want to refactor such code. The basic principle of refactoring is that existing behavior must not change. How do we guarantee this? With tests. That's why the entire piece of code to be refactored must be sufficiently covered by tests. Here we often face a dilemma: the code is structured in a way that makes it (almost) impossible to write simple tests, so a refactoring is needed to make it testable. At the same time, untested code should not be refactored. Fortunately, there are several approaches that can help us.
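To make "existing behavior must not change" concrete, here is a minimal sketch of what Feathers calls a characterization test: it pins down what the code does today, not what it should do. The function `legacy_shipping_cost` and its rules are hypothetical, invented purely for illustration; the tests use Python's standard `unittest` module.

```python
import unittest


# Hypothetical legacy function: its rules are unclear, but the refactoring
# must not change what it currently does.
def legacy_shipping_cost(weight_kg, express):
    cost = 5
    if weight_kg > 10:
        cost += (weight_kg - 10) * 0.5
    if express:
        cost = cost * 2
    if weight_kg == 0:
        cost = 0  # surprising, but it is today's behavior
    return cost


class ShippingCostCharacterizationTest(unittest.TestCase):
    """Records what the code does today, not what it 'should' do."""

    def test_light_parcel_has_flat_rate(self):
        self.assertEqual(legacy_shipping_cost(3, False), 5)

    def test_heavy_parcel_pays_per_extra_kilo(self):
        self.assertEqual(legacy_shipping_cost(14, False), 7.0)

    def test_express_doubles_the_cost(self):
        self.assertEqual(legacy_shipping_cost(14, True), 14.0)

    def test_zero_weight_is_free_even_with_express(self):
        # Possibly a bug, but it stays pinned down until it is fixed deliberately.
        self.assertEqual(legacy_shipping_cost(0, True), 0)
```

The tests can be run with `python -m unittest <file>`. Note the last test: even if today's behavior looks like a bug, we record it and fix it only in a separate, deliberate step after the refactoring.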

Testing complex functions in legacy code with standard unit tests can be challenging. To write effective unit tests, we need to understand the behavior the method implements: a unit test can only verify a result if we already know what to expect for the given test data. Approval tests are a practical alternative. They are often much easier to implement, especially for legacy code, and we don't even need to know the expected behavior in advance: the test records the current output, and once we have approved it, every later run is compared against it. I introduce approval tests in more detail in a separate article.
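The core mechanism of an approval test fits in a few lines. The following hand-rolled sketch is not the API of any library (the ApprovalTests library by Llewellyn Falco offers a full-featured version); it runs a hypothetical legacy function `legacy_discount` for many input combinations, renders the results as text and compares them with a previously approved snapshot. The helper names and the `*.received.txt` / `*.approved.txt` file naming are assumptions modelled on common approval-testing practice.

```python
import itertools
import tempfile
from pathlib import Path


# Hypothetical legacy function whose exact rules nobody remembers.
def legacy_discount(customer_type, order_total):
    if customer_type == "gold":
        return order_total * 0.1 if order_total > 20 else 1
    if customer_type == "silver" and order_total >= 100:
        return 5
    return 0


def render_behavior(func, *input_lists):
    """Call func for every combination of inputs; one text line per call."""
    lines = []
    for args in itertools.product(*input_lists):
        lines.append(f"{args} -> {func(*args)!r}")
    return "\n".join(lines) + "\n"


def verify(name, received, directory):
    """Minimal approval check against <name>.approved.txt.

    On a mismatch (or on the very first run) the output is written to
    <name>.received.txt so a human can inspect it and approve it by
    renaming it to <name>.approved.txt.
    """
    approved_file = directory / f"{name}.approved.txt"
    received_file = directory / f"{name}.received.txt"
    if approved_file.exists() and approved_file.read_text() == received:
        received_file.unlink(missing_ok=True)
        return True
    received_file.write_text(received)
    return False


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        output = render_behavior(legacy_discount,
                                 ["gold", "silver", "basic"],
                                 [0, 20, 50, 100])
        print(verify("discount", output, folder))  # False: nothing approved yet
        # A human reviews the received file and approves it:
        (folder / "discount.received.txt").rename(folder / "discount.approved.txt")
        print(verify("discount", output, folder))  # True: behavior unchanged
```

Nobody had to work out the discount rules in advance: the first run produces the received file, a human reviews and approves it, and from then on any behavioral change introduced during the refactoring shows up as a mismatch.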

Using specific techniques and the automated refactorings built into the IDE, we can break down hard-to-test code piece by piece. Relying exclusively on IDE-supported refactorings keeps the risk of inadvertently changing existing behavior very low. The isolated parts can then be tested. Llewellyn Falco gives an impressive demonstration of this approach.
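As an illustration, the sketch below shows the kind of result an automated *Extract Method* refactoring produces. The receipt functions and the bulk-rebate rule are hypothetical. Before the extraction, the calculation can only be observed through printed output; afterwards, `line_total` can be tested in isolation. The helper `captured` compares the console output of both versions as a quick check that behavior was preserved.

```python
import contextlib
import io


# Before: a hypothetical legacy function in which a price calculation is
# tangled with printing, so the result is only visible on the console.
def print_receipt_before(items):
    total = 0
    for name, price, qty in items:
        amount = price * qty
        if qty >= 10:
            amount = amount * 0.9  # bulk rebate
        total += amount
        print(f"{name}: {amount:.2f}")
    print(f"TOTAL: {total:.2f}")


# After "Extract Method": the calculation lives in its own pure function,
# which can be unit-tested without capturing any output.
def line_total(price, qty):
    result = price * qty
    if qty >= 10:
        result = result * 0.9  # bulk rebate
    return result


def print_receipt_after(items):
    total = 0
    for name, price, qty in items:
        amount = line_total(price, qty)
        total += amount
        print(f"{name}: {amount:.2f}")
    print(f"TOTAL: {total:.2f}")


def captured(func, items):
    """Return everything func prints for the given items."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        func(items)
    return buffer.getvalue()


if __name__ == "__main__":
    sample = [("pen", 1.5, 4), ("paper", 0.2, 10)]
    same = captured(print_receipt_before, sample) == captured(print_receipt_after, sample)
    print("behavior preserved:", same)  # behavior preserved: True
```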

Another option is testing without working directly in the code. System tests, also known as end-to-end tests, check the application through its user interface. They simulate a use case (a specific requirement that the user places on the system) and verify the resulting outcome. If we cannot write unit or integration tests for the code to be refactored, we must determine which use cases this logic affects and cover them with end-to-end tests. This can be a demanding undertaking, because end-to-end tests are time-consuming. In return, we gain the certainty that the refactoring will not change the affected use cases. And let's be honest: this effort only arises because we didn't work cleanly beforehand and skipped the tests.

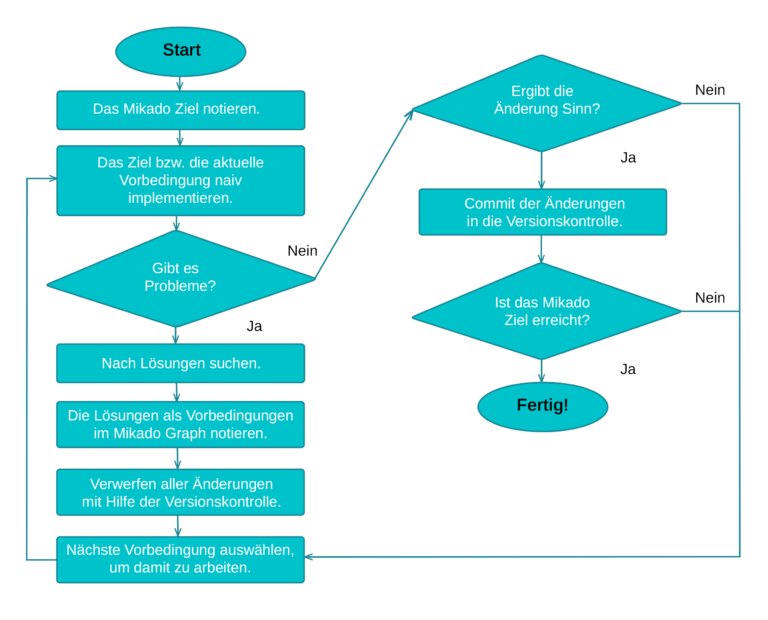

3. Experiment

The third phase helps us give the refactoring a structure. By experimenting on the code we have already analyzed and put under test, we determine how much refactoring is actually required. We carry out these experiments using the Mikado Method, whose aim is to identify all dependencies associated with the planned refactoring. The experiments uncover challenges we may have overlooked during analysis, and at the same time they show whether our refactoring strategy leads to a good result. If necessary, we adjust the strategy. The result is a detailed graph that visualizes every single component and step of the refactoring and serves as the basis for the implementation. In these videos, Stefan Lieser gives insight into how the method works.
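A Mikado graph can be represented as a directed graph in which each node lists the prerequisites that must be completed before it. Because the method works from the leaves back towards the goal, a depth-first, post-order traversal yields an order in which every step comes after its prerequisites. The node names below are hypothetical, and the sketch assumes the graph is acyclic, as a Mikado graph should be.

```python
# Hypothetical Mikado graph: each node lists the prerequisites the experiments
# revealed; the goal is the root, and leaves have no prerequisites.
mikado_graph = {
    "Integrate new payment provider": [
        "Extract PaymentGateway interface",
        "Inject gateway into OrderService",
    ],
    "Extract PaymentGateway interface": ["Move card validation out of PayPalClient"],
    "Inject gateway into OrderService": ["Replace static PayPalClient calls"],
    "Replace static PayPalClient calls": ["Extract PaymentGateway interface"],
    "Move card validation out of PayPalClient": [],
}


def implementation_order(graph, goal):
    """Return all nodes reachable from goal, leaves first (post-order DFS).

    Assumes an acyclic graph; each node appears exactly once.
    """
    order, visited = [], set()

    def visit(node):
        if node in visited:
            return
        visited.add(node)
        for prerequisite in graph.get(node, []):
            visit(prerequisite)
        order.append(node)  # only after all its prerequisites

    visit(goal)
    return order


if __name__ == "__main__":
    steps = implementation_order(mikado_graph, "Integrate new payment provider")
    for number, step in enumerate(steps, start=1):
        print(number, step)
```

The goal always comes last, and "Extract PaymentGateway interface" is scheduled only once, before both nodes that depend on it.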

4. Implementation

The Mikado graph opens up new possibilities for planning the implementation. Thanks to the previous experiments, we are no longer working with unknown source code, and we can better estimate the overall scope of the refactoring. Each step can be estimated separately, and because it has already been implemented experimentally, the estimate is much more realistic.

The structure also allows us to choose between different implementation strategies. The implementation can happen in one continuous stretch or be divided into sub-steps. If time is short, several developers can work in parallel and thus scale the implementation. Alternatively, the implementation can be spread over a longer period to free up capacity for other tasks in the meantime. Structure means being able to make decisions instead of having to react to unforeseen events.
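Once every node of the Mikado graph has an estimate from the experiments, simple arithmetic can support these planning decisions. The following sketch uses hypothetical steps and hour estimates. It groups the steps into waves whose members are independent and could be assigned to different developers, and it compares the total effort (one developer) with the critical path, the longest chain of dependent steps, which bounds the duration from below no matter how many developers join (ignoring coordination overhead).

```python
# Hypothetical Mikado graph: step -> prerequisites that must be finished first.
prerequisites = {
    "Add new feature": ["Split OrderService", "Introduce repository"],
    "Split OrderService": ["Extract pricing logic"],
    "Introduce repository": ["Extract pricing logic", "Wrap SQL calls"],
    "Extract pricing logic": [],
    "Wrap SQL calls": [],
}
# Hypothetical estimates in hours, informed by the phase-3 experiments.
estimate_hours = {
    "Add new feature": 2,
    "Split OrderService": 3,
    "Introduce repository": 5,
    "Extract pricing logic": 4,
    "Wrap SQL calls": 1,
}


def waves(graph):
    """Group steps so that each wave depends only on earlier waves."""
    remaining = dict(graph)
    done = set()
    result = []
    while remaining:
        ready = sorted(step for step, pre in remaining.items()
                       if all(p in done for p in pre))
        if not ready:
            raise ValueError("the Mikado graph contains a cycle")
        result.append(ready)
        done.update(ready)
        for step in ready:
            del remaining[step]
    return result


def critical_path_hours(graph, hours, goal):
    """Sum of estimates along the longest dependency chain ending at goal."""
    memo = {}

    def finish(step):
        if step not in memo:
            memo[step] = hours[step] + max(
                (finish(p) for p in graph[step]), default=0)
        return memo[step]

    return finish(goal)


if __name__ == "__main__":
    for number, wave in enumerate(waves(prerequisites), start=1):
        print(f"Wave {number}: {wave}")
    print("Total effort:", sum(estimate_hours.values()), "h")  # 15 h
    print("Critical path:",
          critical_path_hours(prerequisites, estimate_hours, "Add new feature"), "h")  # 11 h
```

In this made-up example, one developer needs about 15 hours. Two developers working in parallel could finish in about 11 hours, and adding more would not help, because the critical path is 11 hours long.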

Conclusion

As developers, we do refactorings and often work with legacy code; for many of us, a greenfield project is just a distant dream. Chaotic approaches have no place in legacy code. The exaggerated scenario from my introduction is familiar to many and welcome to none. Fortunately, it only takes a little structure to escape it. These four "small" steps make all the difference. The methods and tools presented here are easy to integrate into a refactoring process and enable a planned, predictable and structured approach. Adiós, bellyache!

In addition to the links in the article, I would like to point you to our book recommendations on the subject of refactoring. The works presented there are an excellent foundation!

For all those who would like guidance and practical exercises, I recommend our seminars. Feel free to get in touch with us, and together we will design a training course tailored to your needs.